| Elliott Sound Products | Analogue vs Digital |

The title may not make sense to you at first, because it's obvious that digital exists. You are reading this on a (digital) computer, and the contents of the page were sent via a worldwide digital data network. You can buy digital logic ICs, microprocessors and the like, and they are obviously digital ... or are they? For some time, it's been common to treat logic circuits as being digital, and no knowledge of analogue electronics as we know it was needed. Logic diagrams, truth tables and other tools allowed people to design a digital circuit with no knowledge of 'electronics' at all. Indeed, in some circles this was touted as being a distinct advantage - the subtle nuances of analogue could be ignored because the logic took care of everything. Once you understood Boolean algebra, anything was possible.

The closest that many people come (to this day) to the idea of analogue is when they have to connect a power supply to their latest micro-controller based creation. When it comes to the basics like making the micro interact with the real world, if the examples in the user guide don't cover it, then the user is stopped in his/ her tracks. Even Ohm's law is an unknown to many of those who have only ever known the digital aspect of electronics. Analogue may be eschewed as 'old-fashioned' electronics, and no longer valid with a 'modern' design.

It's common to hear of Class-D amplifiers being 'digital', something that is patently untrue. All of the common digital systems are based on analogue principles. To design the actual circuit for a 'digital' logic gate requires analogue design skills, although much of it is now done by computers that have software designed to optimise the physical geometry of the IC's individual parts.

The simple fact is that everything is analogue, and 'digital' is simply a construct that is used to differentiate the real world from the somewhat illusionary concept that we now call 'digital electronics'. Whether we like it or not, all signals within a microprocessor or other digital device are analogue, and subject to voltage, current, frequency and phase. Inductance, resistance and capacitance affect the signal, and the speed of the digital system or subsystem is determined by how quickly transistors can turn on and off, and the physical distance that a signal may have to travel between one subsystem and another. All of this should be starting to sound very much like analogue by now.

It must be noted that the world and all its life forms are analogue. Nothing in nature is 'black' or 'white', but all can be represented by continuously variable hues and colours (or even shades of grey), different sound pressures in various media or varying temperatures. In analogue electronics these are simply converted to voltage levels. Digital systems have to represent an individual datum point as 'true' or 'false', 'on' or 'off', or zero and one. This isn't how nature works, but it's possible to represent analogue conditions with digital 'words' - a number of on/ off 'bits'. The more bits we use, the closer we come to being able to represent a continuous analogue signal as a usable digital representation of the original data. However, it doesn't matter how many bits are used, some analogue values will be omitted because analogue is (supposedly) infinite, and digital is not.

In reality, an analogue signal is not (and can never be) infinite, and cannot even have an infinite number of useful voltages between two points. The reason is physics, and specifically noise. However, the dynamic range from softest to loudest, darkest to lightest (etc.) is well within the abilities of an analogue system (such as a human) to work with. A 24 bit digital system is generally enough to represent all analogue values encountered in nature, because there are more than enough quantisation levels to ensure that the digital resolution includes the analogue noise floor. (Note that a greater bit depth is often needed when DSP (digital signal processing) is performed, because the processing can otherwise cause signal degradation.)
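To put some numbers on the bit depth argument, the following is a minimal sketch that works out how many discrete levels a given word length provides, and the ratio of full scale to one step in dB. The function name is purely illustrative, and the figure is the ideal ratio only - it takes no account of the noise of a real converter.

```python
import math


def ideal_dynamic_range_db(bits: int) -> float:
    """Ratio of full scale to a single quantisation step, expressed in dB."""
    return 20 * math.log10(2 ** bits)


for bits in (8, 16, 24):
    levels = 2 ** bits
    print(f"{bits:2d} bits: {levels:>10,} levels, "
          f"{ideal_dynamic_range_db(bits):6.1f} dB")
```

Each extra bit doubles the number of levels and adds about 6dB, which is why 24 bits (a little over 144dB) comfortably spans the range between any realistic analogue noise floor and the maximum signal level.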

Every digital system that does useful work will involve sensors, interfaces and supervisory circuits. Sensors translate analogue mechanical functions into an electrical signal, interfaces convert analogue to digital and vice versa (amongst other possibilities that aren't covered here), and supervisory circuits ensure that everything is within range. A simple supervisor circuit may do nothing more than provide a power-on reset (POR) to a digital subsystem, or it may be a complete system within itself, using both analogue and digital processing. The important thing to take from this is that analogue is not dead, and in fact is more relevant than ever before.

The development of very fast logic (e.g. PC processors, memory, graphics cards, etc.) has been possible only because of very close attention to analogue principles. Small devices (transistors, MOSFETs) in these applications are faster than larger 'equivalents' that occupy more silicon. Reduced supply voltages reduce power dissipation (a very analogue concept) and improve speed due to lower parasitic capacitance and/or inductance. If IC designers were to work only in the 'digital' domain, none of what we have today would be possible, because the very principles of electronics would not have been considered. It's instructive to read a book (or even a web page) written by professional IC designers. The vast majority of their work to perfect the final design is analogue, not digital.

It must be said that if you don't even understand the concept of 'R = V / I' then you are not involved in electronics. You might think that programming an Arduino gives you some credibility, but that is only for your ability to program - it has nothing to do with electronics. If this is the case for you, I suggest that you read some of the Beginner's Guides on the ESP website, as they will be very helpful to improve your 'real' electronics skills.

There should be a 'special' place reserved to house the cretins who advertise such gems as a "digital tyre ('tire') inflator" (and no, I'm not joking) and other equally idiotic concepts. As expected, the device is (and can only be) electromechanical because it's a small air compressor, and it might incidentally (or perhaps 'accidentally') have a digital pressure gauge. This does not make it digital, and ultimately the only thing that's digital is the readout - the pressure sensor is pure analogue. Unfortunately, the average person has no idea of the difference between analogue and digital, other than to mistakenly assume that 'digital' must somehow be 'better' due to a constant barrage of advertising for the latest digital products.

The introduction should be understood by anyone who knows analogue design well, but it may not mean much to those who know only digital systems from a programming perspective. This is part of the 'disconnect' between analogue and digital, and unless it is bridged, some people will continue to imagine that impossible things can be done because a computer is being used. In some cases, you may find people who think that 'digital' is the be-all and end-all of electronics, and that analogue is dead.

A great deal of the design of analogue circuitry is based on the time domain. This is where we have concepts (mentioned above) of voltage, current, frequency and phase. These would not exist in an 'ideal' digital system, because it is interested only in logic states. However, it quickly becomes apparent that time, voltage, current and frequency must play a part. A particular logic state can't exist for an infinite or infinitesimal period, nor can it occur only when it feels like it.

All logic systems have defined time periods where data must be present at (or above) a minimum voltage level, and for some minimum time before it can be registered by the receiving device. The voltage (logic level) and current needed to trigger a logic circuit are both analogue parameters, and that circuit needs to be able to source or sink current at its output. This is called 'logic loading' or 'fan-out' - how many external gates can be driven from a single logic output. Datasheets often tabulate this in terms of voltage vs. current - decidedly non-digital specifications.
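Fan-out is nothing more than a current budget, as the following minimal sketch shows. The current figures are assumptions chosen to be typical of standard 74-series TTL (they are not taken from this article), and the function name is hypothetical. Always use the values from the datasheet for the actual device.

```python
def fan_out(i_ol_ua: int, i_il_ua: int, i_oh_ua: int, i_ih_ua: int) -> int:
    """Number of inputs one output can drive, limited by the worse logic state.

    All currents are magnitudes in microamps, kept as integers so the
    division is exact.
    """
    low_state = i_ol_ua // i_il_ua    # output sinking current from the inputs
    high_state = i_oh_ua // i_ih_ua   # output sourcing current into the inputs
    return min(low_state, high_state)


# Assumed, illustrative figures: the output sinks 16mA low and sources 400uA
# high, and each input needs 1.6mA when low and 40uA when high.
STANDARD_TTL = dict(i_ol_ua=16_000, i_il_ua=1_600, i_oh_ua=400, i_ih_ua=40)

print("Fan-out:", fan_out(**STANDARD_TTL))
```

The 'digital' specification (how many gates can be connected) falls straight out of an analogue calculation.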

The digital world is usually dependent on a 'clock' - an event that occurs at regular intervals and signals the processor to move to the next instruction. This may mean that a calculation (or part thereof) should be performed, a reading taken from an input or data should be sent somewhere else. The timing can (in a few cases) be arbitrary, and simply related to an event in the analogue circuitry, such as pressing a button or having a steady state signal interrupted.

The power supply for a digital circuit needs to have the right voltage, and be able to supply enough current to run the logic circuits. Most microcontroller modules provide this info in the data sheet, but many users won't actually understand this. They will buy a power supply that provides the voltage and current recommended in the datasheet. This might be 5V at 2A for a typical microcontroller project board. If someone were to offer them a power supply that could supply 5V at 20A, it may be refused, on the basis that the extra current may 'fry' their board.

Lest the reader think I'm just making stuff up, I can assure you that I have seen forum posts describing this exact scenario. Some users will listen to reason, but others will refuse to accept that their board (or a peripheral) will only draw the current it needs, and not the full current the supply can provide. This happens because people think they don't need to understand Ohm's law, since they are working with software on a pre-assembled project board. Ohm's law cannot be avoided in any area of electronics, as it explains and quantifies so many common problems. Imagine for a moment that you have no idea how much current will flow through a resistance of 100 ohms when you supply it with 12V (the answer is 120mA) - you are unable to understand anything about how the circuit may or may not work!
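The arithmetic is trivial, and a few lines of code are enough to show both the 12V/ 100 ohm example and the power supply 'problem'. This is a minimal sketch only. The function names are made up for the example, and the 10 ohm 'board' is a hypothetical stand-in for a load that draws 500mA at 5V (a real board is not a simple resistor).

```python
def current_a(voltage_v: float, resistance_ohm: float) -> float:
    """Ohm's law: I = V / R."""
    return voltage_v / resistance_ohm


def power_w(voltage_v: float, resistance_ohm: float) -> float:
    """Power dissipated in the resistance: P = V^2 / R."""
    return voltage_v ** 2 / resistance_ohm


def current_drawn_a(supply_v: float, supply_max_a: float, load_ohm: float) -> float:
    """The load sets the current. The supply rating is only an upper limit."""
    demand = current_a(supply_v, load_ohm)
    if demand > supply_max_a:
        raise ValueError("load demands more current than the supply can deliver")
    return demand


print(f"12V across 100 ohms: {current_a(12, 100) * 1000:.0f}mA, "
      f"{power_w(12, 100):.2f}W")

BOARD_OHMS = 10.0  # hypothetical load, equivalent to 500mA at 5V
for rating_a in (2.0, 20.0):
    drawn = current_drawn_a(5.0, rating_a, BOARD_OHMS)
    print(f"5V supply rated at {rating_a:.0f}A: board draws {drawn:.1f}A")
```

The board draws the same current from either supply. The larger supply simply has more capacity in reserve.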

There will be profound confusion if someone is told that LED lighting (for example) requires a constant current power supply, but that it also must have a voltage greater than 'X' volts. If you don't understand analogue, it's impossible to make sense of these requirements. I've even seen one person post that they planned to power a 100W LED from standard 9V batteries, having worked out that s/he needed 3 in series. It's patently clear that basic analogue knowledge is missing, but the person in question argued with every suggestion made. S/he flatly refused to accept that 9V batteries (around 500mAh capacity) would be unable to provide useful light for more than a few seconds (perhaps up to one minute) and the batteries would be destroyed - they are not designed to supply over 3A - ever. It goes without saying that the intended switching circuit was also woefully inadequate.

Almost without exception, beginner users of Arduino, Raspberry Pi, Beaglebone, and other microcontroller platforms imagine that they only need to understand the programming of the device, and as soon as they try to interface to a motor, proportional air valve, or even a lamp (usually LED) or a relay, the wheels fall off the project. The instruction sheets and user guides only go so far, and the instant something different is needed that is not specifically explained in the instructions or help files, the user is stopped in his/ her tracks. This is because the basics of analogue electronics are not only not known, but are considered irrelevant. Some of the questions I've seen on forum sites show an astonishing lack of understanding of the most basic principles, and the questioner usually provides almost no information - not to hide something, but because they don't know what information they need to provide to get useful assistance.

Anyone who is reasonably aware of analogue basics will at least know to search for information when their project doesn't work as expected, but if your experience is only with digital circuits, you will be unable to understand the basic analogue concepts, and it's all deeply mysterious. All 'electronics' training needs to include analogue principles, because without that the real world of electronics simply makes no sense.

Digital systems use switching, so linearity is not an issue - until you have to work with analogue to digital converters (ADCs) or digital to analogue converters (DACs). In analogue systems, linearity is usually essential (think audio for example), but digital is either 'on' or 'off', one or zero. The ADC translates a voltage at a particular point in time into a number that can be manipulated by software. The number is (of course) represented in binary (two states), because the digital system as we use it can only represent two states, although some logic circuits include a third 'open circuit' state to allow multiple devices to access a single data bus.

While linearity is not normally a factor other than in input/ output systems, the accuracy of a computer is limited by the number of bits it can process. For example, CD music is encoded into a 16 bit format, sampled 44,100 times each second (44.1kHz sampling rate). This provides 65,536 discrete levels - actually -32768 to +32767 because each audio sample is a signed 16 bit two's complement integer [ 1 ]. The levels are theoretically between 0V and 5V, but actually somewhat less because zero and 5V are at the limits of the ADCs used. These limits are due to the analogue operation of the digitisation process, and linearity must be very good or the digital samples will be inaccurate, causing distortion.

If we assume 3V peak to peak for the audio input (1V to 4V), each digital sample represents an analogue 'step' of 45.776µV, both for input and output. While it may seem that the processes involved are digital, they're not. Almost every aspect of an ADC is analogue, and we think of it as being digital because it spits out digital data when the conversion is complete. When you examine the timing diagram for any digital IC, there are limits imposed for timing. Data (analogue voltage signals) must be present for at least the minimum time specified before the system is clocked (setup time) and the data are accepted as being valid. The voltages, rise and fall times, and setup times are all analogue parameters, although many people involved in high level digital design don't see it that way.
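The step size quoted above is easily verified. The sketch below assumes the same 3V peak to peak span (1V to 4V) and 16 bit samples. The function name is illustrative only.

```python
def step_size_v(span_v: float, bits: int) -> float:
    """Voltage represented by one step (1 LSB) of an ideal converter."""
    return span_v / (2 ** bits)


BITS = 16
SPAN_V = 4.0 - 1.0  # 3V peak to peak, as assumed in the text

step = step_size_v(SPAN_V, BITS)
lowest_code = -(2 ** (BITS - 1))      # signed two's complement limits
highest_code = 2 ** (BITS - 1) - 1

print(f"Levels: {2 ** BITS}, codes {lowest_code} to {highest_code}")
print(f"Step size: {step * 1e6:.3f}uV")
```

Halve the input span or add one bit, and the step size halves. Whether the converter can actually resolve a step that small depends entirely on its analogue noise and linearity.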

This is partly because many of the common communication protocols are pre-programmed in microcontrollers and/ or processors (or in subroutine libraries) to ensure that the timings are correct, so the programmer doesn't need to worry about the finer details. This doesn't mean they aren't there, nor does it mean that it will always work as intended. A PCB layout error which affects the signal can make communication unreliable or even not work at all. The real reasons can only be found by looking at the analogue waveforms, unless you consider 'blind' trial and error to be a valid faultfinding technique. You may eventually get a result, but you won't really know why, and it will be a costly exercise.

Figure 1 - Analogue or Digital?

The above circuit is quite obviously analogue. There are diodes, transistors and resistors that make up the circuit, and the voltage and current analysis to determine operation conditions are all performed using analogue techniques. The circuit's end purpose? It's a two input NAND gate - digital logic! When 'In1' and 'In2' are both above the switching threshold (> 2V), the output will be low ('zero'), and if either input is low (less than ~1.4V), the output remains high ('one') [ 2 ]. There is no way that the circuit can be analysed using digital techniques. These will allow you to verify that it does what it claims, but not how it does it!

The threshold voltages are decidedly analogue, because while the input transistors may start to conduct with a voltage below 1.2V or so, the operation will be unreliable and subject to noise. This is why the datasheet tells you that a 'high' should be greater than 2V, and a 'low' should be below 0.7V. Ignore these at your peril, but without proper analysis you may imagine that a 'high' is 5V and a 'low' is zero volts. That doesn't happen in any 'real world' circuit (close perhaps, but not perfect!). It should be apparent that the output of the NAND gate can never reach 5V, because there's a resistor, transistor and a diode in series with the positive output circuit. Can this be analysed using only digital techniques? I expect the answer is obvious.

How does it work? In the IC, the two input transistors (Q1A and Q1B) are actually a single transistor with two emitters, but the two separate transistors shown behave identically. If either 'In1' or 'In2' is low, Q2 has no base current and remains off because the base current (via R1) is 'stolen' by either Q1A or Q1B. The totem pole output stage is therefore pulled high (to about 4.7V) by the current through R2. When 'In1' and 'In2' are both high, the base current for Q2 is no longer being stolen, and it turns on with the current provided by R1. This in turn switches on the lower transistor of the totem pole output stage and switches off the upper one, so the output is low. You will have to look at the circuit and analyse these actions yourself to understand it. The main point to take away from all of this is that a 'digital' gate is an analogue circuit!

The above is only a single example of how the lines between analogue and digital are blurred. If you are using the IC, you are interested in its digital 'truth table', but if you were to be asked to design the internals of the IC, you can only do that using analogue design techniques. Note that in the actual IC, Q1A and Q1B are a single transistor having two emitters - something that's difficult using discrete parts. The truth table for a 2 input NAND gate is next - this is what you use when designing a digital circuit.

| In 1 | In 2 | Out |
|---|---|---|
| 0 (< 0.7V) | 0 (< 0.7V) | 1 (> 3V - load dependent) |
| 1 (> 2V) | 0 (< 0.7V) | 1 (> 3V) |
| 0 (< 0.7V) | 1 (> 2V) | 1 (> 3V) |
| 1 (> 2V) | 1 (> 2V) | 0 (< 0.1V) |

The truth table for a simple NAND gate is hardly inspiring, but it describes the output state that exists with the inputs at the possible logical states. It does not show or explain the potential unwanted states that may occur if the input switching speed is too low, and this may cause glitches (very narrow transitions that occur due to finite switching times, power supply instability or noise). The voltage levels shown in the truth table are typical levels for TTL (transistor/ transistor logic).
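The truth table can be expressed in a few lines of code, but only once the analogue input voltages have been translated to logic levels. The following is a minimal sketch using the input thresholds from the table above (0.7V and 2V). The names are hypothetical, and returning 'None' for the region between the thresholds is my own simplification - a real gate will produce some output, but it isn't guaranteed by the datasheet.

```python
from typing import Optional

V_LOW_MAX = 0.7    # below this, the input is a guaranteed logic 0
V_HIGH_MIN = 2.0   # above this, the input is a guaranteed logic 1


def logic_level(voltage_v: float) -> Optional[int]:
    """Translate an input voltage to 0 or 1, or None if it is undefined."""
    if voltage_v < V_LOW_MAX:
        return 0
    if voltage_v > V_HIGH_MIN:
        return 1
    return None  # between the thresholds: not a valid logic level


def nand(in1_v: float, in2_v: float) -> Optional[int]:
    """Two input NAND gate working from input voltages."""
    a, b = logic_level(in1_v), logic_level(in2_v)
    if a is None or b is None:
        return None  # the truth table has nothing to say about this case
    return 0 if (a == 1 and b == 1) else 1


for v1, v2 in ((0.2, 0.2), (3.5, 0.2), (0.2, 3.5), (3.5, 3.5), (1.4, 3.5)):
    print(f"In1 = {v1}V, In2 = {v2}V -> Out = {nand(v1, v2)}")
```

The last case (1.4V on one input) is where the truth table runs out of answers, and only analogue analysis of the circuit can tell you what will really happen.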

It should be pretty clear that while the circuit is 'digital', almost every aspect of its design relies on analogue techniques. If you were to build the circuit shown, it would work in the same way as its IC counterpart, but would naturally take up a great deal more PCB real estate than the entire IC, which contains four independent 2-input NAND gates. The IC version will also be faster, because the integrated components are much smaller and there is less stray capacitance.

It is educational to examine the circuit carefully, either as a simulation or in physical form. The exact circuit shown has been simulated, and it performs as expected in all respects. All other digital logic gates can be analysed in the same way, but the number of devices needed quickly gets out of hand. In the early days of digital logic, simpler circuits were used and they were discrete. ICs as we know them now were not common before 1960, when the first devices started to appear at affordable prices. It's also important to understand that when the IC is designed, the engineer(s) have to consider the analogue properties.

This is basically a nuisance, but if the analogue behaviour is ignored, the device will almost certainly fail to live up to expectations. No designer can avoid the embedded analogue processes in any circuit, and high-speed digital is usually more demanding of analogue understanding than many 'true analogue' circuits! This is largely due to the parasitic inductance and capacitance of the IC, PCB or assembly, and the engineering is often in the RF (radio frequency) domain.

The earliest digital computers used valves (vacuum tubes), and were slow, power hungry, and very limited compared to anything available today. The oldest surviving valve computer is the Australian CSIRAC (first run in 1949), which has around 2,000 valves and was the first digital computer programmed to play music. It used 30kW in operation! It's difficult to imagine a 40 square metre (floor size) computer that had far less capacity than a mobile (cell) phone today. Check the Museums Victoria website too. It should be readily apparent that a valve circuit is inherently analogue, even when its final purpose is to be a digital computer.

Before digital computers, analogue computers were common, many being electromechanical or even purely mechanical. Electronic analogue computers were the stimulus to develop the operational amplifier. The opamp (or op-amp) is literally an amplifier capable of mathematical operations such as addition and subtraction (and even multiplication/ division using logarithmic amplifiers). Needless to say the first opamps used valves.

All digital signals are limited to a finite number of discrete levels, defined by the number of bits used to represent a numerical value. An 8-bit system is limited to 256 discrete values (0-255), and intermediate voltages are not available. Conversion routines can perform interpolation (which can include up-sampling), increasing the effective number of bits or sampling rate, and calculating the intermediate values by averaging the previous and next binary number. These 'new' values are not necessarily accurate, because the actual (analogue) signal that was used to obtain the original 8 bits is not available, so the value is a guess. It's generally a reasonable guess if the sampling rate is high enough compared to the original signal frequency, but it's still only a calculated probability, and is not the actual value that existed.
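The 'guess' is easy to demonstrate. The sketch below doubles the sample rate by averaging adjacent samples, then compares the result with the value the original signal really had. The test signal is an assumption made for the example - an 8-bit sinewave sampled only four times per cycle - and the function name is hypothetical.

```python
import math


def upsample_2x(samples: list[int]) -> list[int]:
    """Insert the (integer) average of each adjacent pair between them."""
    if not samples:
        return []
    out = []
    for current, following in zip(samples, samples[1:]):
        out.append(current)
        out.append((current + following) // 2)  # the interpolated 'guess'
    out.append(samples[-1])
    return out


# Assumed test signal: 128 + 127 * sin(angle), sampled at 0, 90 and 180 degrees
original = [128, 255, 128]
interpolated = upsample_2x(original)

# What the analogue signal actually was at 45 degrees
actual = round(128 + 127 * math.sin(math.radians(45)))

print("Interpolated samples:", interpolated)
print("Guess at 45 degrees :", interpolated[1])
print("Actual at 45 degrees:", actual)
```

With so few samples per cycle the averaged value is well short of the real one. More sophisticated interpolation filters do far better than simple averaging, but the result is still calculated rather than measured.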

An analogue signal varies from its minimum to maximum value as a continuous and effectively infinite number of levels. There are no discontinuities, steps or other artificial limits placed on the signal, other than unavoidable thermal, shot and 1/f (flicker) noise, and the fact that the peak values are limited by the device itself or the power supply voltages. There's no sampling, and there's no reason that a 1µs pulse can't coexist with a 1kHz sinewave. A sampling system operating at 44.1kHz might pick up the 1µs pulse occasionally (if it coincides with a sampling instant), but an analogue signal chain designed for the full frequency range involved can reproduce the composite waveform quite happily, regardless of the repetition rate of the 1µs pulse.

There is also a perceived accuracy with any digital readout [ 3 ]. Because we see the number displayed, we assume that it must be more accurate than the readout seen on an analogue dial. Multimeters are a case in point. Before the digital meter, we measured voltages and currents on a moving coil meter, with a pointer that moved across the dial until it showed the value. Mostly, we just made a mental note of the value shown, rounding it up or down as needed. For example, if we saw the meter showing 5.1V we would usually think "ok, just a tad over 5V".

A digital meter may show the same voltage as 5.16V, and we actually have to read the numbers. Is the digital meter more accurate? We tend to think it is, but in reality it may be reading high, and the analogue meter may have been right all along. There is a general perception that if we see a number represented by normal digits, it is 'precise', whereas a number represented by a pointer or a pair of hands (a clock) is 'less precise'. Part of the reason is that we get a 'sense' of an analogue display without actually decoding the value shown. We may glance at a clock (with hands) and know if we are running late, but if someone else were to ask us what time it is, we'll usually have to look again to decode the display into spoken numbers.

Figure 2 - Analogue And Digital Meter Readouts

In the above, if the meter range is set for 10V full scale, the analogue reading is 7.3 volts (near enough). Provided the meter is calibrated, that's usually as accurate as you ever need. The extra precision (real or imagined) of the digital display showing 7.3012 is of dubious value in real life. It's a different matter if the reading is varying - the average reading on a moving coil meter is easily read despite the pointer moving a little. A digital display will show changing numbers, and it's close to impossible to guess the average reading. The pointer above could be moving by ±0.5V and you'll still be able to get a reading within 100mV fairly easily. Mostly, you'd look at the analogue meter and see that the voltage shown was within the range you'd accept as reasonable. This is more difficult when you have to decode numbers.

The same is true of the speedo (speedometer) in a car - we can glance at it and know we are just under (or over) the speed limit, but without consciously reading our exact speed. A digital display requires that we read the numbers. Aircraft (and many car) instruments show both an analogue pointer and a digital display, so the instant reference of the pointer is available. While we will imagine that the digital readout is more accurate, the simple reality is that both can be equally accurate or inaccurate, depending (for example) on something as basic as tyre inflation. An under-inflated tyre has a slightly smaller rolling radius than one that's correctly inflated, so it will not travel as far for one rotation and the speedo will read high.

Digital readouts require more cognitive resources (in our brains) than simple pointer displays, and the perception of accuracy can be very misleading. A few car makers have tried purely digital speedos and most customers hated them. The moving pointer is still by far the preferred option because it can be read instantly, with no requirement to process the numbers to decide if we are speeding or not. There's a surprisingly large amount of info on this topic on the Net, and the analogue 'readout' is almost universally preferred.

Most of the parameters that we read as numbers (e.g. temperature, voltage, speed, etc.) are analogue. They do not occur in discrete intervals, but vary continuously over time. In order to provide a digital display, the continuously varying input must be digitised into a range of 'steps' at the selected sampling rate. Then the numbers for each sample can be manipulated if necessary, and finally converted into a format suitable for the display being used (LED, LCD, plasma, etc.). If the number of steps and the sample rate are both high enough to represent the original signal accurately, we can then read the numbers off the display (or listen to the result) and be suitably impressed - or not.

Within any digital system, it's possible to bypass the laws of physics. Consider circuit simulation software for example. A mathematically perfect sinewave can be created that has zero distortion, meaning it is perfectly linear. You can look at signals that are measured in picovolts, and the simulator will let you calculate the RMS value to many decimal places. There's no noise (unless you tell the simulator that you want to perform a noise analysis). While this is all well and good, if you don't understand that a real circuit with real resistance will have (real) noise, then the results of the simulation are likely to be useless. The simulator may lead you to believe that you can get a signal to noise ratio of 200dB, but nature (the laws of physics) will ensure failure.

To put the above into perspective, the noise generated by a single 200 ohm resistor at room temperature is 257nV measured from 20Hz to 20kHz. This is a passive part, and it generates a noise voltage and current dependent on the value (in ohms), the temperature and bandwidth. If the bandwidth is increased to 100kHz, the noise increases to 575nV. Digital systems are not usually subject to noise constraints until they interface to the analogue domain via an ADC or DAC, but other forms of noise can affect a digital data stream. Digital radio, TV and internet connections (via ADSL, cable or satellite) use analogue front end circuitry, so noise can cause problems.
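The figures above come from the standard thermal (Johnson) noise formula, Vn = sqrt( 4 × k × T × R × B ), and can be checked with the short sketch below. The temperature of 300K (about 27°C) is my assumption, chosen because it reproduces the quoted values, and the function name is illustrative.

```python
import math

BOLTZMANN = 1.380649e-23  # Boltzmann's constant, joules per kelvin


def thermal_noise_v(resistance_ohm: float, bandwidth_hz: float,
                    temperature_k: float = 300.0) -> float:
    """RMS thermal noise voltage of a resistor: sqrt(4 * k * T * R * B)."""
    return math.sqrt(4 * BOLTZMANN * temperature_k * resistance_ohm * bandwidth_hz)


audio_band = thermal_noise_v(200, 20_000 - 20)    # 20Hz to 20kHz
wide_band = thermal_noise_v(200, 100_000 - 20)    # 20Hz to 100kHz

print(f"200 ohms, 20Hz-20kHz : {audio_band * 1e9:.1f}nV")
print(f"200 ohms, 20Hz-100kHz: {wide_band * 1e9:.1f}nV")
```

Noise voltage rises with the square root of resistance, temperature and bandwidth, so a 5:1 increase of bandwidth raises the noise by a factor of about 2.24.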

In all cases, if you have an outdoor antenna with a booster amplifier, the booster is 100% analogue, and so is most of the 'digital' tuner in a TV or radio. The signal remains analogue through the tuner, IF (intermediate frequency) stages and the detector. It's only after detection and demodulation that the digital data stream becomes available. If you want to see the details, a good example is the SN761668 digital tuner IC from Texas Instruments. There are others that you can look at as well, and it should be apparent that the vast majority of all signal processing is analogue - despite the title 'digital tuner'.

The same applies to digital phones, whether mobile (cell) phones or cordless home phones. Transmitters and receivers are analogue, and the digitised speech is encoded and decoded before transmission and after reception respectively.

The process of digitisation creates noise, due to quantisation (the act of digitally quantifying an analogue signal). Unsurprisingly, this is called quantisation noise, and the distortion artifacts created in the process are usually minimised by adding 'dither' during digitisation or when the digital signal is returned to analogue format. Dither is simply a fancy name for random noise! Dither is used with all digital audio and most digital imaging, as well as many other applications where cyclic data will create harmonic interference patterns (otherwise known as distortion). A small amount of random noise is usually preferable to quantisation distortion.
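The effect of dither can be shown with a very simple experiment. The sketch below quantises a steady level that sits between two steps (0.3 of one step), with and without added noise. The triangular noise of ±1 step is an assumption for the example (it's a common choice, but it is not the only one), and the names are hypothetical.

```python
import math
import random


def quantise(value_lsb: float) -> int:
    """Round to the nearest whole step (values are in units of one step)."""
    return math.floor(value_lsb + 0.5)


def triangular_dither(rng: random.Random) -> float:
    """Random noise spanning +/-1 step: the sum of two uniform values."""
    return rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)


rng = random.Random(1)   # fixed seed so the run is repeatable
level = 0.3              # steady input, part way between two steps
count = 20_000

plain = [quantise(level) for _ in range(count)]
dithered = [quantise(level + triangular_dither(rng)) for _ in range(count)]

plain_mean = sum(plain) / count
dithered_mean = sum(dithered) / count

print(f"No dither  : average = {plain_mean:.3f} steps")
print(f"With dither: average = {dithered_mean:.3f} steps")
```

Without dither every sample is zero, so the information is lost completely. With dither the individual samples are noisy, but their average is close to the real level, so the signal has been preserved at the expense of a little random noise.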

Few digital systems are useful unless they can talk to humans in one way or another, so the 'ills' of the analogue domain cannot be avoided. It's all due to the laws of physics, and despite many attempts, no-one has managed to circumvent them. If you wish to understand more about noise, see Noise In Audio Amplifiers.

It's essential to understand what a simulator (or indeed any computer system) can and cannot do, when working purely in the digital domain. Simulated passive components are usually 'ideal', meaning that they have no parasitic inductance, capacitance or resistance. Semiconductor models try to emulate the actual component, but the degree of accuracy (especially imperfections) may not match real parts. If you place two transistors of the same type into the schematic editor, they will be identical in all respects. You need to intervene to be able to simulate variations that are found in the physical parts. Some simulators allow this to be done easily, others may not. 'Generic' digital simulator models often only let you play with propagation time, and all other functions are 'ideal'. Most simulators won't let you examine power supply glitches (caused by digital switching) unless the track inductance and capacitance(s) are inserted - as analogue parts.

The 'ideal' condition applies to all forms of software. If calculations are made using a pocket scientific calculator or a computer, the results will usually be far more precise than you can ever measure. Calculating a voltage of 5.03476924 volts is all well and good, but if your meter can only display 3 decimal places the extra precision is an unwelcome distraction. If that same meter has a quoted accuracy of 1%, then you should be aware that exactly 5V may be displayed as anything between 4.950V and 5.050V (5V ±50mV). You also need to know that the display is ±1 digit as well, so the reading could now range from 4.949 to 5.051. The calculated voltage is nearly an order of magnitude more precise than we can measure. No allowance has even been made for component tolerance yet, and this could make the calculated value way off before we even consider a measurement!
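The range of possible readings is simple to work out. This minimal sketch uses the figures from the paragraph above (1% accuracy, ±1 digit on a display with 3 decimal places). The function name is illustrative.

```python
def reading_limits(true_value: float, accuracy_percent: float,
                   one_digit: float) -> tuple[float, float]:
    """Lowest and highest display reading for a given true value."""
    error = true_value * accuracy_percent / 100 + one_digit
    return true_value - error, true_value + error


lowest, highest = reading_limits(5.0, accuracy_percent=1.0, one_digit=0.001)
print(f"A true 5V may read anywhere from {lowest:.3f}V to {highest:.3f}V")
```

Any digits calculated beyond that uncertainty tell you nothing about the real circuit.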

If the meter hasn't been calibrated recently, it might be off by several percent and you'd never know unless another meter tells you something different. Then you have to decide which one is right. In reality, both could be within tolerance, but with their error in opposite directions. Bring on a third meter to check the other two, and the fun can really start. Now, measure the same voltage with an old analogue (moving coil) meter. It tells you that the voltage is about 5V, and there's no reason to question an approximation - especially if the variation makes no difference to the circuit's operation.

Someone trained in analogue knows that "about 5V" is perfectly ok, but someone who only knows the basics of digital systems will be nonplussed. Because they imagine that digital logic is precise, the variation is cause for concern. I get emails regularly asking if it's alright that nominal ±15V supply voltages (from the P05 power supply board) measure +14.6V and -15.2V (for example). The answer is "yes, that's fine". This happens because the circuits are analogue, and people ask because they expect precision. Very precise voltages can be created, but most circuits don't need them. To an 'old analogue man', "about 5V" means that the meter's pointer will be within 1 pointer width of the 5V mark on the scale - probably between 4.9 and 5.1 volts. Likewise, "about ±15V" is perfectly alright.

Ultimately, everything we do (or can do) in electronics is limited by the laws of physics. In the early days, the amplifying devices were large vacuum tubes (aka valves), and there were definite limits as to their physical size. Miniature valves were made, but they were very large indeed compared to a surface mount transistor, and positively enormous compared to an integrated circuit containing several thousand (or million) transistors. None of this means that the laws of physics have been altered; what has changed is our understanding of what can be achieved, and working out better/ alternative ways to reach the end result. Tiny switching transistors in computer chips get smaller (and faster) all the time, allowing you to perform meaningless tasks faster than ever before.

An area where the laws of physics really hurt analogue systems is recordings. Any quantity can be recorded by many different methods, but there are two stand-out examples - music and film. When an analogue recording is copied, it inevitably suffers from some degradation. Each successive copy is degraded a little more. The same thing happens with film and also used to be an issue with analogue video recordings. Each generation of copy reduces the quality until it no longer represents the original, and it becomes noisy and loses detail.

Now consider a digital copy. The music or picture is represented by a string of ones and zeros (usually with error correction), which can be copied exactly. Copies of copies of copies will be identical to the original. There is no degradation at all. There are countless different ways the data can be stored, but unlike a piece of engraved metal, ink on paper (or papyrus) or a physical photograph, there is an equally countless range of issues that can cause the data to disappear completely. The physical (analogue) items can disappear too, but consider that we have museums with physical artifacts that are thousands of years old. Will a flash drive achieve the same longevity?

Will anyone look after our digital data with the same diligence? If all of your photos are on your smartphone or a flash drive and it fails, is lost or stolen, they are probably gone forever. The long forgotten stash of old grainy 'black and white' photos found in the back of an old dresser or writing desk can bring untold delight, but I expect that finding a flash drive in 50 years time will not have the same outcome. It's probable that someone might recognise it as an 'ancient storage device', but will they be able to extract the data - assuming the data even survived?

By way of contrast, think of vinyl recordings and floppy discs. I have vinyl that's 50 years old, and not only can I still play it, the music thereon is perfectly recognisable in all respects. The quality may not be as good as other vinyl that's perhaps only 30 years old, but the information is still available to me and countless others. Earlier records may be over 80 years old, and are still enjoyed. How many people reading this can still access the contents of a floppy disc? Not just the 'newer' 3½" ones, but earlier 8" or 5¼" floppies? Very few indeed, yet the original floppy is only 47 years old (at the time of writing). Almost all data recorded on them is lost because few people can access it. If it could be accessed, is there a computer that could still extract the information? How much digital data recorded on any current device will be accessible in 50 years time?

Before anyone starts to get complacent, let's look at a pair of circuits. Both are CMOS (complementary metal oxide semiconductor), so they both have N-Channel and P-Channel transistors. Circuit 2 has some resistors that are not present in Circuit 1, and that will (or should) be a clue as to what each circuit might achieve. I quite deliberately didn't show any possible feedback path in either circuit though.

Figure 3 - Two Very Different CMOS Circuits

Look at the circuits carefully, and it should be apparent that one is designed for linear (analogue) applications, and the other is not. What you may not realise is that the non-linear circuit (Circuit 1) actually can be run in linear mode, and the linear circuit (Circuit 2) can be run in non-linear mode. "Oh, bollocks!" said Pooh, as he put away his soldering iron and gave up on electronics for good.

Circuit 1 is a CMOS NAND gate, and Circuit 2 is a (highly simplified) CMOS opamp, with 'In 1' being the non-inverting input and 'In 2' the inverting input. Early CMOS ICs (those without an output buffer, not the 4011B shown in Circuit 1) were often used in linear mode. While performance was somewhat shy of being stellar (to put it mildly), it worked, and was an easy way to get some (more or less) linear amplification into an otherwise all-digital circuit without having to add an opamp. When buffered outputs became standard (4011B, which includes Q5-Q8), linear operation caused excessive dissipation.

The lines become even more blurred when we look at a high speed data bus. When the length of the tracks on a PCB or the wires in a high speed digital cable becomes significant compared to the wavelength, the tracks or wires no longer act as simple conductors, but behave like an RF transmission line. No-one should imagine that transmission lines are digital, because this is a very analogue concept. Transmission lines have a characteristic impedance, and the far end must be properly terminated. If the terminating impedance is incorrect, there are reflections and standing waves within the transmission line, and these can seriously affect the integrity of the signal waveform, as discussed next.

In the Coax article, there's quite a bit of info on coaxial cables and how they behave as a transmission line, but many people will be unaware that tracks on a PCB can behave the same way. The same applies to twisted pair and ribbon cables. If PCB tracks are parallel and don't meander around too much, the transmission line will be fairly well behaved and is easily terminated to ensure reliable data transfer. There is considerable design effort needed to ensure that high speed data transmission is handled properly [ 4 ]. This is not a trivial undertaking, and the misguided soul who runs a shielded cable carrying fast serial data may wonder why the communication link is so flakey. If s/he works out the characteristic impedance and terminates the cable properly, the problem simply goes away [ 5 ]. This is pure analogue design. It might be a digital data stream, but moving it from one place to another requires analogue design experience.

This used to be an area that was only important for radio frequency (RF) engineering, and in telephony where cables are many kilometres in length. The speed of modern digital electronics has meant that even signal paths of a few 10s of millimetres need attention, or digital data may be corrupted. A pulse waveform may only have a repetition rate of (say) 2MHz, but it is rectangular, so it has harmonics that extend to well over 100MHz. Even if the rise and fall times are limited (8.7ns is shown in the following figures), there is significant harmonic energy at 30MHz, a bit less at 50MHz, and so on. These harmonic frequencies can exacerbate problems if a transmission line is not terminated properly.
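To put some rough numbers on the harmonic content, the sketch below uses the standard textbook Fourier series for a trapezoidal pulse train with equal (linear) rise and fall times. It is an idealised estimate in Python, not a measurement, and the 50% duty cycle, unit amplitude and function names are assumptions made for illustration.

```python
import math

def sinc(x):
    """Normalised sinc: sin(pi*x)/(pi*x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def harmonic_amplitude(n, amplitude, freq_hz, duty, rise_s):
    """Amplitude of the n-th harmonic of a trapezoidal pulse train
    with equal rise and fall times (textbook Fourier series result)."""
    return (2 * amplitude * duty
            * abs(sinc(n * duty))
            * abs(sinc(n * freq_hz * rise_s)))

F0 = 2e6          # 2MHz repetition rate (from the text)
T_RISE = 8.7e-9   # 8.7ns rise and fall time (from the text)
DUTY = 0.5        # assumed 50% duty cycle, so only odd harmonics exist

fundamental = harmonic_amplitude(1, 1.0, F0, DUTY, T_RISE)
for n in (1, 15, 25, 51):   # 2MHz, 30MHz, 50MHz and 102MHz
    level = harmonic_amplitude(n, 1.0, F0, DUTY, T_RISE)
    rel_db = 20 * math.log10(level / fundamental)
    print(f"{n * F0 / 1e6:5.0f} MHz: {rel_db:6.1f} dB relative to fundamental")
```

The limited rise time only starts to pull the harmonics down appreciably above roughly 1/(π × rise time), which is why there is still plenty of energy at 30MHz.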

It's not only the cables that matter when a signal goes 'off-board', either to another PCB in the same equipment or to the outside world. Connectors become critical as well, and higher speeds place greater constraints on the construction techniques needed for connectors so they don't seriously impact on the overall impedance. There are countless connector types, and while some are suited to high speed communications, others are not. While an 'ordinary' connector might be ok for low speed data, you need to use matched cables and connectors (having the same characteristic impedance) if you need to move a lot of data at high speeds. This is one of the reasons that there are so many different connectors in common use.

Figure 4 - Transmission Line Test Circuit

This test circuit is used for the simulations shown below. Yes, it's a simulation, but this is something that simulators do rather well. Testing a physical circuit will show less effect, because the real piece of cable (or PCB tracks) will be lossy, and this reduces the bad behaviour - at least to a degree. The simulations shown are therefore worst case - reality may not be quite as cruel. Note too that all simulations used a zero ohm source to drive the transmission line. When driven from the characteristic impedance, the effects are greatly reduced. Most dedicated line driver ICs have close to zero ohms output impedance, so that's what was used. This is a situation where everything matters! [ 6 ]

In each of the following traces, the red trace is the signal as it should be at the end of the line (with R2 set for 120 Ohms - the actual line impedance). For the tests, R2 was arbitrarily set for 10k, as this is the sort of impedance you might expect from other circuitry. Note that if the source has an impedance of 120 ohms, there is little waveform distortion, but the signal level is halved if the transmission line is terminated at both ends.

The input signal is a 'perfect' 10MHz squarewave, and that is filtered with R1 and C1 (a 100MHz low pass filter) to simulate the performance you might expect from a fairly fast line driver IC. The green trace shows what happens when the line is terminated with 10k - it should be identical to the red trace!

Figure 5 - Mismatched Transmission Line Behaviour

In the above drawing, you can see the effect of failing to terminate a transmission line properly. The transmission line itself has a delay time of just 2ns (a distance of about 300mm using twisted pair cable or PCB tracks) and a characteristic impedance of 120 ohms. There is not much of a problem if the termination impedance is within ±50%, but beyond that everything falls apart rather badly [ 7 ]. Just in case you think I might be exaggerating the potential problems, see the following oscilloscope capture.

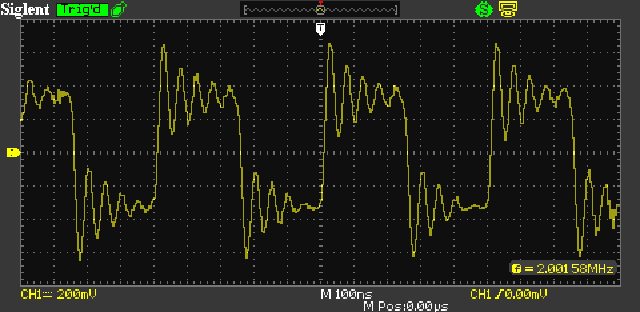

Figure 5A - Oscilloscope Capture Of Mismatched Transmission Line

The above is a direct capture of a 2MHz squarewave, feeding a 1 metre length of un-terminated 50 ohm coaxial cable. The source impedance is 10 ohms, ensuring a fairly severe mismatch, but not as bad as when the source impedance is much lower. This has been included to show that the simulations are not something dreamed up, but are very real and easily replicated. It is quite obvious that this cannot be viewed as a 'digital' waveform, regardless of signal levels. The 'ripples' are not harmonically related to the input frequency, but are due to the delay in the cable itself. If the input frequency is changed, the ripples remain the same (frequency, amplitude and duration). Each cycle of the reflection waveform takes about 65ns, so the frequency is a little over 15MHz. Note that the peak amplitude is considerably higher than the nominal signal level (as shown in Figure 5).
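The mechanism behind the ringing can be sketched with a simple 'bounce' (lattice) calculation. The Python below assumes an ideal lossless line driven by a perfect voltage step, and simply follows the wave as it reflects back and forth between load and source. It is a rough model for illustration (the function names are mine), and it ignores the rise time filter and cable losses.

```python
def reflection_coefficient(z_end, z0):
    """Fraction of an arriving wave reflected by an impedance z_end
    at the end of a line with characteristic impedance z0."""
    return (z_end - z0) / (z_end + z0)

def load_voltage_steps(v_step, z_source, z_load, z0, arrivals):
    """Load voltage after each successive arrival of the wave
    (arrivals occur at 1, 3, 5 ... times the one-way line delay)."""
    gamma_s = reflection_coefficient(z_source, z0)
    gamma_l = reflection_coefficient(z_load, z0)
    wave = v_step * z0 / (z_source + z0)   # wave launched into the line
    v_load = 0.0
    history = []
    for _ in range(arrivals):
        v_load += wave * (1 + gamma_l)     # incident plus reflected wave
        history.append(round(v_load, 3))
        wave *= gamma_l * gamma_s          # one round trip via the source
    return history

Z0 = 120  # line impedance used for the simulations in the text
print(load_voltage_steps(1.0, 0, 120, Z0, 6))     # terminated correctly
print(load_voltage_steps(1.0, 0, 10_000, Z0, 6))  # 10k load, zero ohm source
print(load_voltage_steps(1.0, 120, 120, Z0, 6))   # matched at both ends
```

With a correct termination the load settles at the first arrival. With 10k and a zero ohm source, the voltage swings between almost double the step and almost zero on alternate arrivals, decaying only slowly. With 120 ohms at both ends there is no ringing, but the level is halved.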

An electrical signal in a vacuum travels at 300 metres/ µs, or around 240m/ µs in typical cables (0.8 velocity factor). At that velocity factor, this works out to be roughly 1ns for each 240mm or so of cable or PCB trace. A PCB track that wanders around for any appreciable distance delays the signal it's carrying, and if not terminated properly the signal can become unusable. It should be apparent that things can rapidly go from bad to worse if twisted pair or coaxial cables are used over any appreciable distance and with an impedance mismatch (high speed USB for example). Proper termination is essential.
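The relationship between length, velocity factor and delay is simple arithmetic. A minimal Python sketch follows. The function name and the 1 metre example are illustrative only, and the 0.66 velocity factor is an assumed figure that's typical of coax with solid polyethylene insulation.

```python
C_MM_PER_NS = 299.792458  # speed of light in a vacuum, in mm per nanosecond

def propagation_delay_ns(length_mm, velocity_factor):
    """One-way delay of a line of the given length and velocity factor."""
    return length_mm / (C_MM_PER_NS * velocity_factor)

for vf in (1.0, 0.8, 0.66):
    delay = propagation_delay_ns(1000, vf)
    print(f"1 metre at velocity factor {vf}: {delay:.2f} ns")
```

Even a metre of cable delays the signal by several nanoseconds, which is a significant fraction of the rise time of a fast logic signal.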

It is clearly wrong to say that any of this is digital. While the signal itself may start out as a string of ones and zeros (e.g. +Ve and GND respectively), the way it behaves in conductors is dictated solely by analogue principles. The term 'digital' applies to the decoded data that can be manipulated by gates, microprocessors, or other logic circuits. The transmission of signals requires (analogue) RF design principles to be adopted.

You may think that the protection diodes in most CMOS logic ICs will help. Sadly, they can easily make things a great deal worse, as shown next. However, this will only happen if the source signal level is from GND to Vcc, where Vcc is the logic circuit supply voltage. At lower signal levels (such as 1-4V in a 5V system for example) the diodes may not conduct and mayhem might be avoided.

Figure 6 - Mismatched Transmission Line With Protection Diodes

If ringing causes the input to exceed an IC's maximum input voltage limits (as shown above), the protection diodes will conduct. The 0-5V signal is now close to unusable - it does not accurately show 'ones and zeros' as they were transmitted. When the diodes conduct, the transmission line is effectively terminated with close to zero ohms. This creates reflections that corrupt the signal so badly that the chance of recovering the original digital data is rendered very poor. If this happened to a video signal, the image would be badly pixellated. It may be possible to recover the original data with (hopefully) minimal bit errors by using a filter to remove the high frequency glitches, but proper termination solves the problem completely.

It is a mistake to imagine that because digital is 'ones and zeros', it is not affected by the analogue systems that transport it from 'A' to 'B'. There is a concept in digital transmission called the BER (bit error rate), and this is an indicator of how many bits are likely to be corrupted in a given time (usually 1 second, or per number of bits). For example, HDMI is expected to have a BER of 10⁻⁹ - one error every billion bits, which is around one error every 8 seconds at normal HDMI transmission speed for 24 bit colour and 1080p.

Unlike TCP/IP (as used for network and internet traffic), HDMI is a one-way protocol, so the receiver can't tell the transmitter that an error has occurred (although error correction is used at the receiving end). The bit-rate is sufficient to ensure that a pixel with incorrect data (an error) will be displayed for no more than 16ms, and will not be visible. Poor quality cable may not meet the impedance requirements (thus causing a mismatch), and may show visible errors due to an excessive BER. Cables can (and do) matter, but they require proper engineering, not expensive snake oil.

Another widely used transmission line system is the SATA (serial AT attachment) bus used for disc drives in personal (and industrial) computers. This is a low voltage (around 200-600mV, nominally ±500mV p-p) balanced transmission line, which is terminated with 50 ohms. Because of the high data rate (1.5Gb/s for SATA I, 3.0Gb/s for SATA II), the driver and receiver circuitry must match the transmission line impedance, and be capable of driving up to 1 metre of cable (2 metres for eSATA). If you want to know more, a Web search will tell you (almost) everything you need to know. The important part (that most websites will not point out) is that the whole process is analogue, and there is nothing digital involved in the cable interfaces. Yes, the data to and from the SATA cable starts and ends up as digital, but transmission is a fully analogue function.

Commercial SATA line driver/ receiver ICs such as the MAX4951 imply that the circuitry is digital, but the multiplicity of 'eye diagrams' and the 50Ω terminating resistors on all inputs and outputs (shown in the datasheet) tell us that the IC really is analogue. 'True' digital signals are shown with timing diagrams (which are themselves analogue if one wants to be pedantic), but not with eye diagrams, and terminating resistors are not used with most logic ICs except in very unusual circumstances (I can't actually think of any at the moment). While there is no doubt at all that you need to be a programmer to enable the operating system to use a SATA driver IC in a computer system, the internal design of the cable drivers and receivers is analogue.

The 'eye diagram' is so called because it resembles eyes (or perhaps spectacles). The diagram is what you will see on an oscilloscope if the triggering is set up in such a way as to provide a 'double image', where positive and negative going pulses are overlaid. An ideal diagram would have nice clean lines to differentiate the transitions, but noise, jitter (amplitude or time) and other factors can give a diagram that shows the signal may be difficult to decode. The eye diagram below is based on single snapshots of a signal with noise, but the 'real thing' will show an accumulation of samples.

Figure 7 - 'Eye Diagram' For Digital Signal Transmission

The central part of each 'eye' (green bordered area in the first eye) shows a space that is clear of noise or ringing created by poor termination. The larger this open section compared to the rest of the signal the better, as that means there is less interference to the signal, and a clean digital output (with a low BER) is more likely. Faster rise and fall times improve things, but to be able to transmit a passable rectangular (pulse) waveform, the entire transmission path needs a bandwidth at least 3 to 5 times the pulse frequency. The blue line shows the signal as it would be with no noise or other disturbance. Note that jitter (unstable pulse widths) is usually the result of noise that makes it difficult to determine the zero-crossing points of the waveform (where the blue lines cross).

Some form of filtering and/ or equalisation may be used prior to the signal being sampled to re-convert the analogue electrical signal back to digital for further processing, display, etc. It is clear that the signals shown above aren't digital. They may well be carrying digitally encoded data, but the signal itself is analogue in all respects. Fibre optic transmission usually has fewer errors than cable, but the transmission and reception mechanisms are still analogue.

To give you an idea of the signal levels that are typical of a cable internet connection, I used the diagnostics of my cable modem to look at the signal levels and SNR (signal to noise ratio). Channel 1 operates at 249MHz, has a SNR of 43.2dB with a level of 7.3dBmV (2.31mV). Even though the system is supposedly digital, the levels involved are low, and it would be unwise to consider it as a 'digital' signal path. The modulation scheme used for cable internet is QAM (quadrature amplitude modulation) which is ... analogue (but you already guessed that). QAM is referred to as a digital encoding technique, but that just means that digital signal streams are accommodated - it does not mean that the process is digital (other than superficially).
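For those not used to working in decibels, dBmV is simply a voltage expressed relative to 1mV. The Python sketch below converts the modem figures quoted above. The function names are made up for illustration, and treating the SNR as a voltage ratio (20 × log) is an assumption made to get a feel for the equivalent noise level.

```python
def dbmv_to_mv(level_dbmv):
    """Convert a level in dBmV (dB relative to 1mV) to millivolts."""
    return 10 ** (level_dbmv / 20)

def db_to_voltage_ratio(db):
    """Convert decibels to a voltage ratio."""
    return 10 ** (db / 20)

signal_mv = dbmv_to_mv(7.3)            # channel level from the modem
snr_ratio = db_to_voltage_ratio(43.2)  # SNR from the modem
noise_uv = signal_mv / snr_ratio * 1000

print(f"Signal: {signal_mv:.2f} mV")
print(f"SNR as a voltage ratio: {snr_ratio:.0f}:1")
print(f"Equivalent noise: {noise_uv:.1f} uV")
```

A signal of a couple of millivolts with noise in the tens of microvolts is a very long way from the 0-5V 'ones and zeros' that most people imagine.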

Some may find all of this confronting. It's not every day that you are told that what you think you know is largely an illusion, and that everything is ultimately brought back to basic physics, which is analogue through and through. The reality is that you can cheerfully design microcontroller applications and expect them to work. Provided you are willing to learn about simple analogue design, you'll be able to interface your project to anything you like. The main thing is that you must accept that analogue is not 'dead', and it's not even a little bit ill. It is the foundation of everything in electronics, and deserves the greatest respect.

If you search for "analogue vs digital", some of the explanations will claim that analogue is about 'measuring', while digital is 'counting'. It's implied (and stated) that measuring is less precise than counting, so by extension, digital is more 'accurate' than analogue. While it's an interesting analogy in some ways, it's also seriously flawed. It may not be complete nonsense, but it comes close.

In the majority of real-world cases, the quantity to be processed will be an analogue function. Time, weight (or pressure), voltage, temperature and many other things to be processed are analogue, and can only be represented as a digital 'count' after being converted from the analogue output of a vibrating crystal, pressure sensor, thermal sensor or other purely analogue device. Digital thermometers do not measure temperature digitally, they digitise the output of an analogue thermal sensor. The same applies for many other supposedly digital measurements.

If physical items interrupt a light beam, the (analogue) output from the light sensor can be used to increment a digital counter, and that will be exact - provided there are no reflections that cause a mis-count. So, even the most basic 'true' digital process (counting) often relies on an analogue process that's working properly to get an accurate result. Fortunately, it's usually easy enough to ensure that a simple 'on-off' sensor only reacts to the items it's meant to detect. However, this shows that the 'measuring vs. counting' analogy is flawed, so we should discount that definition because it's simply not true in the majority of cases.

As noted earlier, much of the accuracy of digital products is assumed because we are shown a set of numbers that tell us the magnitude of the quantity being displayed. Whether it's a voltmeter showing 5.021V or a digital scale showing 47.8 grams, we assume it's accurate, because we see a precise figure. Everyone who reads the numbers will get the same result, but everyone reading the position of a pointer on a graduated scale will not get the same result. This might be because they are unaware of parallax error (look it up), or their estimation of an intermediate value (between graduations) is different from ours.

One way of differentiating analogue and digital is to deem analogue to be a system that includes irrational numbers (such as π - Pi), while digital is integers only. This isn't strictly true when you look at the output of a calculation performed digitally, but internally the fractional part of the number is not infinite. It stops when the processing system can handle no more bits. A typical 'digital' thermometer may only be able to show one decimal place, so it can show 25.2° or 25.3°, but nothing in between (this is quantisation error). The number displayed is still based on an analogue temperature sensing element, and it can only be accurate if calibrated properly.
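Both points are easy to demonstrate. The Python sketch below first shows that the value a computer stores for π is actually a ratio of two integers (so it's not irrational at all), then mimics a display that can only show one decimal place. It assumes the usual 64-bit floating point format, and the function name is made up for illustration.

```python
import math
from fractions import Fraction

# The stored value of pi is exactly this ratio of two integers
stored_pi = Fraction(math.pi)
print(stored_pi)
print(stored_pi.denominator)  # a power of two, because the format is binary

def displayed_temperature(true_temp_c):
    """Quantise a reading to one decimal place, as the display would."""
    return round(true_temp_c, 1)

for temp in (25.20, 25.24, 25.26, 25.30):
    print(f"{temp:.2f} -> {displayed_temperature(temp)}")
```

Anything between 25.2° and 25.3° is forced to one or the other, so the display can be up to 0.05° away from the sensor's output before any calibration error is even considered.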

You also need to consider an additional fact. In order for any ADC or DAC to provide an accurate representation of the original signal, it requires a stable reference voltage. If there is an error (such as a 5V reference being 5.1V for example), the digital signal will have a 2% error. With a DAC having the exact same reference voltage, the end result will be accurate, but if not - there is an error. The digital signal is clearly dependent on an analogue reference voltage being exactly the voltage it's meant to be. This fact alone makes nonsense of the idea that digital is 'more accurate' than analogue.
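The effect of a reference error can be sketched in a few lines of Python. This is an idealised model only. The 10-bit resolution, the 2.5V input and the function names are assumptions for illustration, and a real ADC adds offset, gain and linearity errors of its own.

```python
def adc_code(v_in, v_ref_actual, bits=10):
    """Ideal ADC: the code produced depends on the *actual* reference."""
    steps = 1 << bits
    code = int(v_in / v_ref_actual * steps)
    return max(0, min(steps - 1, code))

def code_to_volts(code, v_ref_nominal, bits=10):
    """What the software reports, assuming the *nominal* reference."""
    return code * v_ref_nominal / (1 << bits)

V_IN = 2.5  # assumed test voltage
for v_ref in (5.0, 5.1):
    code = adc_code(V_IN, v_ref)
    reported = code_to_volts(code, 5.0)
    error_pct = (reported - V_IN) / V_IN * 100
    print(f"Vref = {v_ref}V: code {code}, reported {reported:.3f}V "
          f"({error_pct:+.2f}%)")
```

With the reference at 5.1V the reported voltage is about 2% low, and nothing in the digital data gives any hint that it's wrong.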

While this article may look like it is 'anti digital', that is neither the intention nor the purpose. Digital systems have provided us with so much that we can't live without any more, and they offer techniques that were impossible before the computer became a common household item. CDs, digital TV and radio, and other 'modern marvels' are not accepted by everyone, so (for example) there are countless people who are convinced that vinyl sounds better than CD. One should ask if that's due to the medium or recording techniques, especially since there are now so many people who seem perfectly content with MP3, which literally throws away a great deal of the audio information.

Few would argue that analogue TV was better than 1080p digital TV, and we now have UHD (ultra high-definition) sets that are capable of higher resolution than ever before. It wasn't long ago that films in cinemas were shown using actual 35mm (or occasionally 70mm) film, but the digital versions have now (mostly) surpassed what was possible before. Our ability to store thousands of photographs, songs and videos on computer discs has all but eliminated the separate physical media we once used. Whether the digital version is 'as good', 'better' or 'worse' largely depends on who's telling the story - some film makers love digital, others don't, and it's the same with music.

The microphone (and loudspeaker) are perfect examples of analogue transducers. There is no such thing as a 'true' digital microphone, and although it's theoretically possible, the diaphragm itself will still produce analogue changes that have to be converted to digital - this will involve analogue circuitry! Likewise, there's no such thing as a digital loudspeaker, although that too is theoretically possible (although most must still be electromechanical - analogue). Ultimately, the performance will still be dictated by physics, which is (quite obviously) not in the digital domain.

The important thing to understand is that all digital systems rely extensively on analogue techniques to achieve the results we take for granted. The act of reading the digital data from a CD or Blu-Ray disc is analogue, as is the connection between the PC motherboard and any disc drives. The process of converting the extracted data back into 'true' digital data streams relies on a thorough understanding of the analogue circuitry. Those who work more-or-less exclusively in the digital domain (e.g. programmers) often have little or no understanding of the interfaces between their sensors, processors and outputs. This can lead to sub-optimal designs unless an analogue designer has the opportunity to verify that the system integrity is not compromised.

Analogue engineering is indispensable, and it does no harm at all if a programmer learns the basic skills needed to create these interfaces (indeed, it's essential IMO). An interface can be as complex as an expensive oversampling DAC chip or as simple as a transistor turning a relay on and off. If the designer knows only digital techniques, the project probably won't end well. Any attempt at interfacing to a motor or other complex load is doomed, because there is no understanding of the physics principles involved. "But there's a chip for that" some may cry, but without understanding analogue principles, it's a 'black box', and if something doesn't work the programmer is stopped in his/ her tracks. Countless forum posts prove this to be true.

This article came about (at least in part) from seeing some of the most basic analogue principles totally misunderstood in many forum posts. At times I had to refrain from exclaiming out loud (to my monitor, which wouldn't hear me) that I couldn't believe the lack of knowledge - even of Ohm's law. The many articles for beginners on my site and elsewhere that answer these questions were never consulted. The first action when stuck is often to post a question (that in many cases doesn't even make any sense) on a forum.

1 Compact Disc Digital Audio - Wikipedia

2 Chapter 6, Gate Characteristics - McMaster University

3 Analog And Digital - ExplainThatStuff

4 High-Speed Layout Guidelines - Texas Instruments

5 Twisted Pair Cable Impedance Calculator - EEWeb

6a AN-991 Line Driving and System Design Literature - Texas Instruments

6b Transmission Line Effects Influence High Speed CMOS - AN-393, ON-Semi/ Fairchild

7 High Speed Layout Guidelines - SCAA082, Texas Instruments

8 As edge speeds increase, wires become transmission lines - EDN
